Overview of Autonomous Vehicles

There are various ways to implement how an autonomous vehicle perceives its surroundings, makes decisions, and carries out those decisions. The degree of autonomy is determined by which driving tasks the vehicle performs on its own. All of these implementations, though, share the same basic behavior at the system level.

Because OEMs take different approaches to enabling self-driving technology, the extent of vehicle autonomy can be a confusing topic. The levels of driving automation defined by the Society of Automotive Engineers (SAE), which run from Level 0 (no automation) to Level 5 (full automation), are currently regarded as the industry standard. These levels are summed up in the figure below:

In general, Levels 1 and 2 assist the driver with certain active safety features, which can help in critical situations. These features only improve the safety and comfort of driving; the human being is still the principal operator.

Driving becomes automated at Level 3, but only under certain restricted conditions; these conditions define the operational design domain (ODD). The vehicle's ODD expands with each level until it is effectively unrestricted at Level 5, as the onboard machine learning algorithms learn how to handle edge cases (bad weather, unpredictable traffic, etc.).
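The relationship between automation level and ODD can be made concrete with a small sketch. The level names follow SAE J3016, but the `Odd` fields (speed, weather, road type) and the helper functions are hypothetical simplifications chosen for illustration; a real ODD definition covers many more conditions.

```python
from dataclasses import dataclass
from enum import IntEnum


class SaeLevel(IntEnum):
    """SAE J3016 driving automation levels (0 through 5)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


@dataclass(frozen=True)
class Odd:
    """Hypothetical ODD: the conditions under which the system may drive itself."""
    max_speed_kph: float
    allowed_weather: frozenset
    allowed_road_types: frozenset


def within_odd(odd, speed_kph, weather, road_type):
    """Return True when the current conditions fall inside the ODD."""
    return (
        speed_kph <= odd.max_speed_kph
        and weather in odd.allowed_weather
        and road_type in odd.allowed_road_types
    )


def human_must_supervise(level):
    """At Levels 0 to 2 the human remains the principal operator."""
    return level <= SaeLevel.PARTIAL_AUTOMATION


# Illustrative Level 3 ODD: slow highway traffic in fair weather (assumed values).
traffic_jam_pilot = Odd(
    max_speed_kph=60.0,
    allowed_weather=frozenset({"clear", "cloudy"}),
    allowed_road_types=frozenset({"highway"}),
)
```

With this sketch, `within_odd(traffic_jam_pilot, 50.0, "snow", "highway")` evaluates to false, which is the kind of situation in which a Level 3 system would hand control back to the driver.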

Anatomy of a Self-Driving Car

1. Sensing system

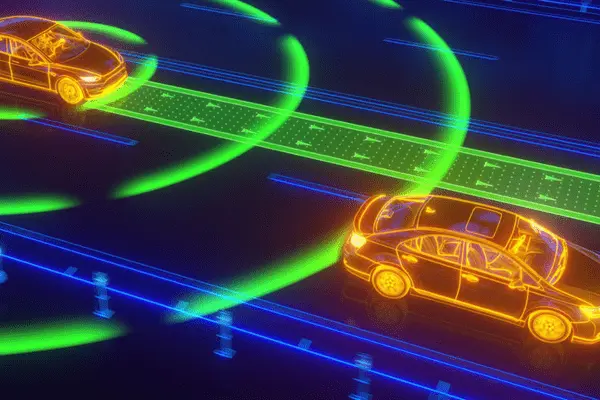

In the architecture of an autonomous vehicle, sensors form the first major system. They observe the environment and supply the information needed to locate the car on a map. Any autonomous vehicle needs multiple sensors. These include cameras for computer vision-based object detection and classification, LiDARs for building 3D point clouds of the environment to identify objects precisely, radars for determining the direction and speed of other vehicles, inertial measurement units (IMUs) for helping estimate the vehicle's own heading and speed, GNSS receivers (such as GPS) with RTK correction for localizing the vehicle, ultrasonic sensors for short-range distance measurement, and many more. A crucial design factor is positioning each sensor around the vehicle body to provide 360° coverage, which aids object detection even in the blind spots.

The graphic below shows how several sensors can be placed so that few blind spots remain.

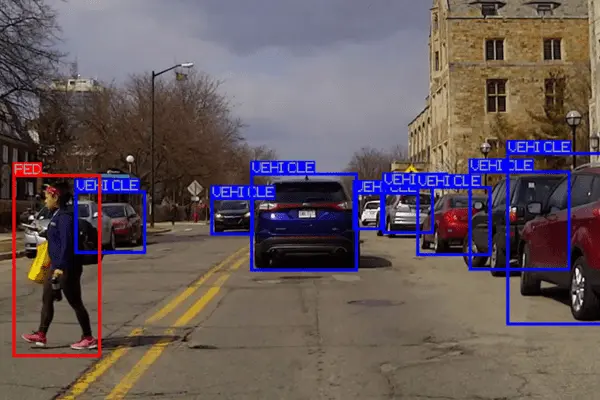

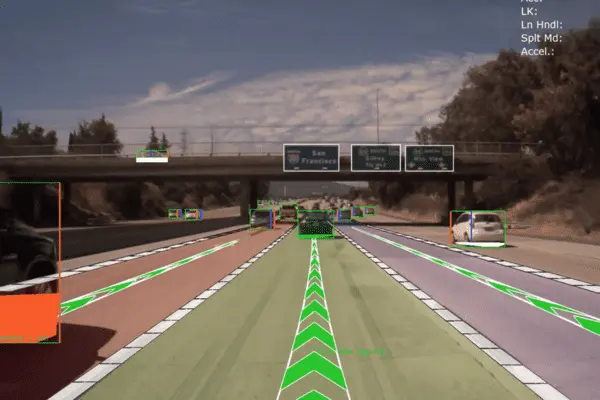
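One rough way to reason about 360° coverage is to check, for every bearing around the vehicle, whether at least one sensor's horizontal field of view contains it. The sketch below does this in the horizontal plane only and ignores range, occlusion, and mounting height; the function name and the `(mount_yaw_deg, fov_deg)` representation are assumptions made for illustration.

```python
def blind_spot_degrees(sensors, step_deg=1):
    """Return the bearings (in degrees) that no sensor covers.

    sensors: list of (mount_yaw_deg, fov_deg) pairs, where mount_yaw_deg is
    the direction the sensor faces (0 = straight ahead) and fov_deg is its
    full horizontal field of view.
    """
    uncovered = []
    for bearing in range(0, 360, step_deg):
        seen = False
        for yaw, fov in sensors:
            # Smallest signed angular difference, wrapped into [-180, 180).
            diff = (bearing - yaw + 180) % 360 - 180
            if abs(diff) <= fov / 2:
                seen = True
                break
        if not seen:
            uncovered.append(bearing)
    return uncovered


# Hypothetical layouts: one forward camera versus four corner-facing sensors.
front_only = [(0, 120)]
surround = [(0, 90), (90, 90), (180, 90), (270, 90)]
```

In this toy model, `blind_spot_degrees(surround)` returns an empty list, while the single forward sensor leaves everything behind and beside the vehicle unobserved.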

2. Perception

An autonomous car carries many sensors, so data from several of them must be combined (a process known as sensor fusion) for the car to understand its environment. This requires knowing where the road is (semantic segmentation), what kind of object each detection is (object detection and classification), and the position, velocity, and direction of motion of each object, such as a car or pedestrian (tracking). Perception is the block that makes sense of all of this.
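As a minimal illustration of sensor fusion, the sketch below combines independent measurements of the same quantity (for example, the distance to a lead vehicle from radar and from LiDAR) by inverse-variance weighting. This is one simple textbook technique, not necessarily what a production perception stack uses, and the variances in the example are made-up numbers.

```python
def fuse(estimates):
    """Fuse independent measurements of one quantity.

    estimates: list of (value, variance) pairs.
    Returns (fused_value, fused_variance). Each measurement is weighted by
    the inverse of its variance, so more certain sensors count for more.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    return fused_value, 1.0 / total_weight


# Assumed example: LiDAR reports 20.0 m (variance 0.25), radar 22.0 m (variance 1.0).
distance_m, variance = fuse([(20.0, 0.25), (22.0, 1.0)])
```

The fused estimate lands closer to the more precise sensor, and its variance is smaller than that of either input, which is the main reason for fusing in the first place.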

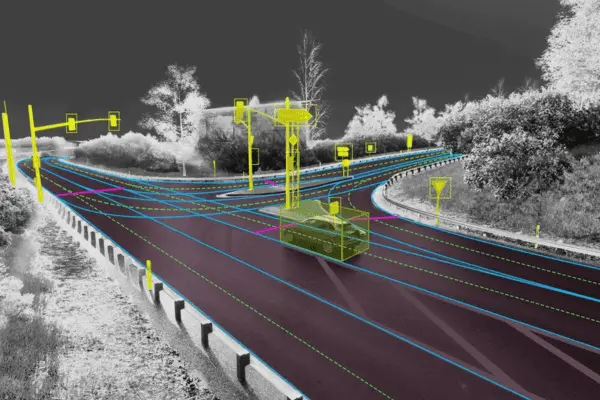

3. Map-making and localization

During mapping and localization, the car uses sensor data to build highly precise three-dimensional maps of its surroundings and tracks the location of the vehicle on the map in real time. Every sensor offers a different perspective on the environment, which helps the system map its surroundings. The host car is then located by comparing these maps with newly acquired sensor data. Depending on the sensor being used, a variety of localization techniques can be applied.

LiDAR-based localization matches its point clouds against the available 3D maps, whereas vision-based localization relies on camera images. To get high-accuracy results, many localization algorithms also pair a GNSS (Global Navigation Satellite System) receiver with Real-Time Kinematic (RTK) positioning and fuse the estimates with readings from an inertial measurement unit (IMU).
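Combining GNSS fixes with inertial data is commonly done with a Kalman filter. The sketch below is a deliberately reduced one-dimensional version: it assumes a velocity already derived from the IMU for the prediction step and a GNSS position for the correction step. The class name, the noise values, and the one-dimensional state are all simplifying assumptions; real systems estimate full 3D pose.

```python
class Kalman1D:
    """One-dimensional Kalman filter tracking position along a road."""

    def __init__(self, position, variance):
        self.position = position
        self.variance = variance

    def predict(self, velocity, dt, process_var):
        """Dead-reckon forward using an IMU-derived velocity (assumed given)."""
        self.position += velocity * dt
        # Uncertainty grows while no absolute fix is available.
        self.variance += process_var

    def update(self, measured_position, measurement_var):
        """Correct the prediction with a GNSS position fix."""
        gain = self.variance / (self.variance + measurement_var)
        self.position += gain * (measured_position - self.position)
        self.variance *= 1.0 - gain


# Assumed example values: 10 m/s for 0.1 s, then a GNSS fix at 2.0 m.
kf = Kalman1D(position=0.0, variance=1.0)
kf.predict(velocity=10.0, dt=0.1, process_var=0.0)
kf.update(measured_position=2.0, measurement_var=1.0)
```

Here the prediction and the GNSS fix are equally uncertain, so the filter settles halfway between them and halves its variance.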

4. Prediction, planning, and control

After localizing the vehicle, the system must anticipate the actions of other dynamic objects before it can plan a route for the car to follow. This prediction problem can be approached with regression techniques such as decision trees, neural networks, and Bayesian regression, among others.
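The simplest regression-based predictor fits a straight line to an object's recent positions and extrapolates it, which amounts to a constant-velocity assumption. The sketch below uses ordinary least squares as a stand-in for the richer models named above; the function names and the `(t, x, y)` track format are assumptions for illustration.

```python
def fit_line(times, values):
    """Ordinary least-squares fit of values = slope * t + intercept."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    covariance = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    variance = sum((t - mean_t) ** 2 for t in times)
    slope = covariance / variance
    return slope, mean_v - slope * mean_t


def predict_position(track, t_future):
    """Extrapolate a tracked object's (x, y) position to time t_future.

    track: list of (t, x, y) observations with at least two distinct times.
    """
    times = [point[0] for point in track]
    vx, x0 = fit_line(times, [point[1] for point in track])
    vy, y0 = fit_line(times, [point[2] for point in track])
    return x0 + vx * t_future, y0 + vy * t_future


# Assumed example: a pedestrian observed at t = 0, 1, 2 s.
pedestrian_track = [(0.0, 0.0, 0.0), (1.0, 2.0, 1.0), (2.0, 4.0, 2.0)]
x_pred, y_pred = predict_position(pedestrian_track, 3.0)
```

A linear fit like this cannot capture turns or braking, which is why more expressive models are typically needed for road users that change behavior.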

Next, the planning block uses the vehicle's intelligence to understand its situation within the surroundings and to devise a strategy for reaching the desired location. This covers global planning (e.g., the route to follow while traveling from point A to point B) and local planning (e.g., deciding whether to halt at an intersection or to plan an overtaking maneuver).
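Global planning from point A to point B is often framed as a shortest-path search over a road graph. The sketch below uses Dijkstra's algorithm on a tiny hand-made graph; the node names and edge costs are invented for illustration, and real planners typically work on much larger maps with richer cost functions.

```python
import heapq


def shortest_route(graph, start, goal):
    """Dijkstra's algorithm over a weighted road graph.

    graph: dict mapping node -> dict of neighbor -> travel cost.
    Returns (total_cost, path), or (inf, []) if the goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, edge_cost in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + edge_cost, neighbor, path + [neighbor]))
    return float("inf"), []


# Hypothetical road network; costs could stand for minutes of travel time.
roads = {
    "A": {"B": 4, "C": 1},
    "B": {"D": 3},
    "C": {"B": 2, "D": 7},
    "D": {},
}
route_cost, route = shortest_route(roads, "A", "D")
```

In this example the detour through C is cheaper than the direct edge from A to B, so the planner picks the route A, C, B, D.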

Lastly, in the control block, the actuators receive commands from the controller to carry out the plan that was decided upon in the preceding block. Typically, a feedback system works in tandem with the controller to continuously monitor how closely the car is adhering to the plan. The control mechanism can also vary depending on the type of movement to be performed and the required level of automation. Common techniques include proportional-integral-derivative (PID) control, model predictive control (MPC), and the linear quadratic regulator (LQR).
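Of the techniques listed, PID is the easiest to show in a few lines. The sketch below is a textbook discrete PID controller used as a speed controller; the gains and the example numbers are arbitrary assumptions, and a real vehicle controller would also need output limits and integrator anti-windup.

```python
class PID:
    """Textbook discrete PID controller."""

    def __init__(self, kp, ki, kd):
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, setpoint, measured, dt):
        """Return the control command for one time step of length dt."""
        error = setpoint - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Assumed example: target speed 10 m/s, measured 8 m/s and then 9 m/s.
speed_controller = PID(kp=2.0, ki=0.5, kd=0.1)
first_command = speed_controller.step(setpoint=10.0, measured=8.0, dt=0.1)
second_command = speed_controller.step(setpoint=10.0, measured=9.0, dt=0.1)
```

The second command is smaller than the first because the error has shrunk and the derivative term damps the response as the car approaches the target speed. This is the feedback loop described above: each step compares the plan with the measured state.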

Dorle Controls: Development and Testing of Autonomous Systems

Our areas of expertise at Dorle Controls include functional safety, simulation, feature integration, testing, and application software development for autonomous systems. This covers data annotation services and applications of neural networks, image processing, sensor fusion, and computer vision, along with features including AEB, ACC, LKA, LCA, FCW, park assist, road texture classification, driver monitoring, and facial landmark tracking. Write to info@dorleco.com to learn more.