Introduction

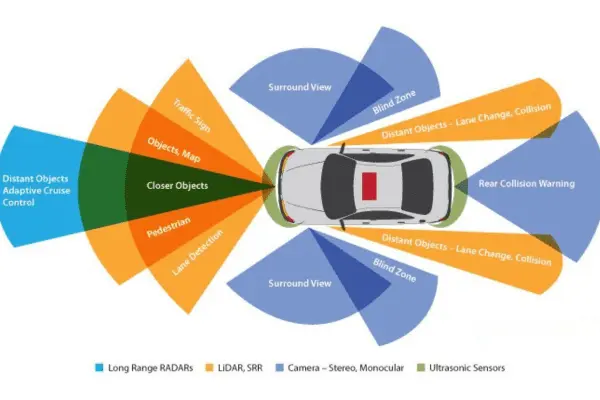

Autonomous vehicles need multiple sensors with different specifications, operating conditions, and ranges. Cameras and other vision-based sensors provide the data needed to recognize specific objects on the road, but they are sensitive to changes in weather. Radar sensors perform well in practically all weather conditions, but they cannot produce a precise three-dimensional representation of the environment. LiDAR sensors are expensive, yet they provide a highly accurate map of the vehicle’s surroundings.

The Need for Integrating Multiple Sensors

Although each sensor serves a distinct function, an autonomous car cannot rely on any one of them alone. To make decisions comparable to, or in some situations better than, those a human driver makes, the car needs information from several sources to increase accuracy and build a fuller understanding of its surroundings.

Sensor fusion, therefore, becomes a crucial element.

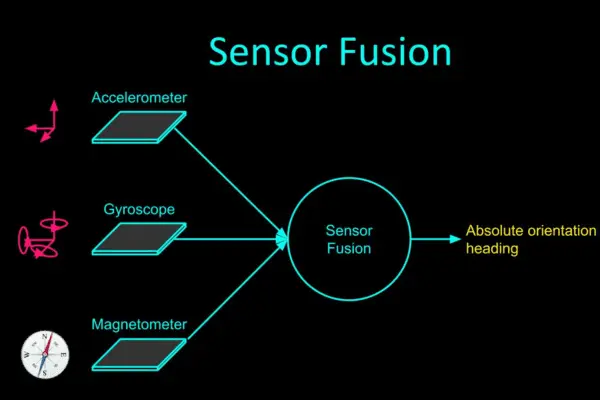

Sensor Fusion

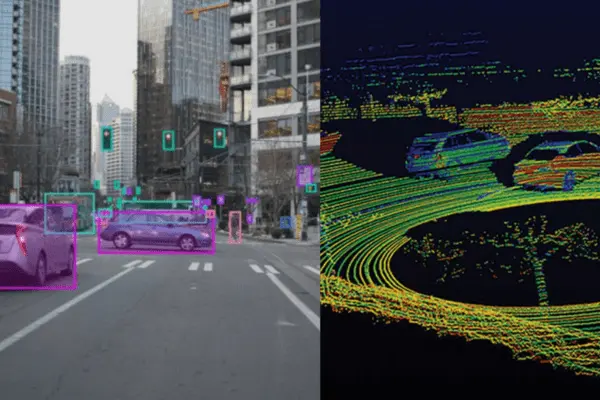

In essence, sensor fusion means using all of the information gathered by the sensors positioned around the car’s body to make driving decisions. Its main benefit is reducing the uncertainty that would remain if each sensor were used on its own.
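To make the uncertainty argument concrete, here is a minimal worked example under simplifying assumptions: two sensors make independent, unbiased measurements x1 and x2 of the same distance, with Gaussian noise variances σ1² and σ2². The minimum-variance (inverse-variance weighted) combination of the two is:

```latex
\hat{x} = \frac{\sigma_2^2\, x_1 + \sigma_1^2\, x_2}{\sigma_1^2 + \sigma_2^2},
\qquad
\hat{\sigma}^2 = \frac{\sigma_1^2\, \sigma_2^2}{\sigma_1^2 + \sigma_2^2} \le \min\left(\sigma_1^2, \sigma_2^2\right)
```

With σ1² = 4 m² and σ2² = 1 m², for example, the fused variance is 4 · 1 / (4 + 1) = 0.8 m², lower than either sensor’s variance alone. Real sensor errors are often correlated or non-Gaussian, so this should be read as an idealized illustration rather than a guarantee.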

Combining sensors in this way compensates for each sensor’s shortcomings and produces a robust sensing system. In typical driving situations, sensor fusion also adds significant redundancy to the system, meaning that several sensors detect the same objects.

This redundancy helps ensure that no objects are missed when one or more sensors are unreliable. For example, in good weather a camera can capture the surroundings of a moving car, but in heavy fog or rain it won’t provide enough information to the system. This is where LiDAR and radar sensors come in handy. A radar sensor, for instance, can reliably detect a truck stopped at a red light at an intersection.

However, radar may not be able to provide a three-dimensional view of that truck; for this, LiDAR is needed. So although detecting the same object with several sensors can seem redundant in ideal conditions, sensor fusion is essential in edge cases such as bad weather.

Levels of Sensor Fusion

1. Low-Level Fusion (Early Fusion):

In this approach, a single computing unit combines the raw data from all of the sensors before processing it. For instance, camera pixels and LiDAR point clouds are fused to determine the size and shape of a detected object. Because all of the data reaches the processing unit, the approach has a wide range of potential uses: different algorithms can draw on different parts of the data. The drawback is the complexity of transferring and processing such massive volumes of data, which requires high-performance processing units and therefore raises hardware costs.
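As a rough illustration of raw-data fusion, the sketch below merges the point clouds of two LiDARs into a single vehicle-frame cloud before any detection runs; fusing camera pixels with LiDAR points follows the same principle of bringing raw data into a common frame. The mounting poses are hypothetical, and rotation is restricted to yaw for brevity; a real vehicle would use full, calibrated extrinsics.

```python
import math

def to_vehicle_frame(points, yaw, tx, ty, tz):
    """Transform (x, y, z) points from a sensor frame into the vehicle frame.

    Assumes the sensor is rotated only about the vertical axis (yaw, radians)
    and mounted at offset (tx, ty, tz) metres from the vehicle origin.
    """
    c, s = math.cos(yaw), math.sin(yaw)
    return [(c * x - s * y + tx, s * x + c * y + ty, z + tz) for x, y, z in points]

def fuse_point_clouds(clouds):
    """Low-level fusion: merge raw clouds from several LiDARs into one list.

    `clouds` is a list of (points, (yaw, tx, ty, tz)) pairs, one per sensor.
    """
    merged = []
    for points, (yaw, tx, ty, tz) in clouds:
        merged.extend(to_vehicle_frame(points, yaw, tx, ty, tz))
    return merged

# Hypothetical mounting poses: one LiDAR facing forward, one facing backward.
front = ([(1.0, 0.0, 0.0), (5.0, 1.0, -0.5)], (0.0, 2.0, 0.0, 1.5))
rear = ([(1.0, 0.0, 0.0)], (math.pi, -1.0, 0.0, 1.5))
cloud = fuse_point_clouds([front, rear])
```

Every downstream algorithm then sees one consistent cloud, which is what makes early fusion flexible, and also why the data volume grows with every sensor added.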

2. Mid-Level Fusion:

In mid-level fusion, each sensor first detects objects on its own, and the algorithm then fuses these detections. This is typically done with a Kalman filter (described below). The idea is to have, say, a camera and a LiDAR sensor detect an obstacle individually and then fuse both results to get the best estimates of the obstacle’s position, class, and velocity. Although this approach is simpler to implement, the fusion process may fail if one of the sensors fails.
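A minimal sketch of the position part of this idea, assuming each sensor reports an obstacle position with a per-axis noise variance and that the x and y axes are independent (diagonal covariances). The detection values and variances are hypothetical. Each detection is applied as a scalar Kalman measurement update on top of a predicted position:

```python
def kalman_update(mean, var, z, r):
    """One scalar Kalman measurement update.

    mean, var: current estimate and its variance
    z, r: measurement and its noise variance
    """
    k = var / (var + r)  # Kalman gain: how much to trust the measurement
    return mean + k * (z - mean), (1.0 - k) * var

def fuse_detections(prior, detections):
    """Mid-level fusion of per-sensor object detections.

    prior: ((x, y), (var_x, var_y)) predicted obstacle position
    detections: list of ((x, y), (var_x, var_y)), one per sensor
    Axes are treated as independent, a simplification.
    """
    (x, y), (var_x, var_y) = prior
    for (zx, zy), (rx, ry) in detections:
        x, var_x = kalman_update(x, var_x, zx, rx)
        y, var_y = kalman_update(y, var_y, zy, ry)
    return (x, y), (var_x, var_y)

prior = ((20.0, 0.0), (1.0, 1.0))
camera = ((21.0, 0.2), (1.0, 0.25))  # hypothetical camera detection
lidar = ((20.2, 0.1), (0.5, 0.2))    # hypothetical LiDAR detection
pos, var = fuse_detections(prior, [camera, lidar])
# pos is approximately (20.35, 0.13), var approximately (0.25, 0.1)
```

Each update shrinks the variance, and the gain weights a measurement by how its noise compares with the current uncertainty, so a precise sensor moves the estimate more than a noisy one.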

3. High-Level Fusion (Late Fusion):

This is similar to mid-level fusion, except that the results are fused only after detection and tracking algorithms have run on each sensor individually. The drawback is that tracking errors in one sensor can affect the fusion as a whole.
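To illustrate late fusion, here is a hedged sketch in which each sensor’s tracker has already produced a list of tracks, and the fusion step only associates and merges them. The `Track` type, the 2 m gate, greedy nearest-neighbour association, and plain averaging are all simplifying assumptions; production systems typically weight tracks by their covariances.

```python
import math
from dataclasses import dataclass

@dataclass
class Track:
    track_id: str
    x: float  # metres, vehicle frame
    y: float

def fuse_tracks(tracks_a, tracks_b, gate=2.0):
    """Greedy nearest-neighbour association of two sensors' track lists."""
    fused = []
    unmatched_b = list(tracks_b)
    for ta in tracks_a:
        best, best_d = None, gate
        for tb in unmatched_b:
            d = math.hypot(ta.x - tb.x, ta.y - tb.y)
            if d <= best_d:
                best, best_d = tb, d
        if best is None:
            fused.append(ta)  # only sensor A is tracking this object
        else:
            unmatched_b.remove(best)
            fused.append(Track(f"{ta.track_id}+{best.track_id}",
                               (ta.x + best.x) / 2, (ta.y + best.y) / 2))
    fused.extend(unmatched_b)  # objects only sensor B is tracking
    return fused

camera_tracks = [Track("c1", 10.0, 0.0), Track("c2", 30.0, 5.0)]
radar_tracks = [Track("r1", 10.4, 0.2), Track("r7", 50.0, -3.0)]
fused = fuse_tracks(camera_tracks, radar_tracks)
```

Because the merged position is an average of finished tracks, a drifting track from one sensor pulls the fused result with it, which is the weakness described above.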

There are also different types of sensor fusion. In competitive sensor fusion, different sensors generate data about the same object, and the redundant measurements are checked against each other for consistency. In complementary sensor fusion, two sensors together create a more complete picture than either could produce on its own. Coordinated (often called cooperative) fusion combines sensors to derive higher-quality information than any single sensor provides. For instance, two distinct 2D views of an object can be combined to create a 3D representation of it.
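The two-viewpoint example can be made concrete with classic stereo triangulation. Assuming two rectified cameras offset horizontally by a baseline B, a point that appears d pixels apart in the two images (the disparity) lies at depth Z = f · B / d, where f is the focal length in pixels. The numbers below are illustrative only:

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth of a point seen by two horizontally offset, rectified cameras.

    Z = f * B / d, with f in pixels, B in metres, d in pixels.
    Neither camera alone can recover Z; it only emerges from combining both views.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

depth = stereo_depth(700.0, 0.5, 35.0)  # 10.0 metres
```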

Variations in the Sensor Fusion Approach

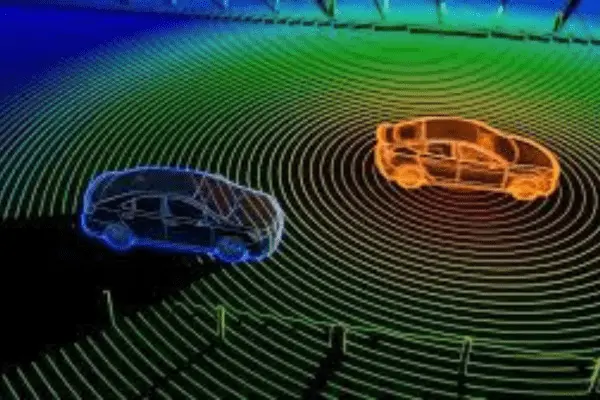

1. Radar-LiDAR Fusion

No single method solves every sensor fusion problem, since different sensors serve different functions. To fuse a LiDAR and a radar sensor, you can apply the mid-level fusion approach.

This means combining the objects detected by each sensor and drawing conclusions afterward.

This method can use a Kalman filter, which follows a “predict-and-update” cycle: the filter predicts the object’s next state from its previous estimate, then corrects that prediction with the current measurement, producing a better starting point for the next iteration.

The following illustration shows this in a simpler, more intuitive way.
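The predict-and-update cycle can also be sketched in code. This is a minimal one-dimensional example, assuming a constant-velocity model with state [position, velocity], process noise Q = q · I, and a sensor that measures position only; the initial guess and noise values are hypothetical.

```python
def predict(x, P, dt, q):
    """Constant-velocity prediction for state x = [position, velocity]."""
    p, v = x
    x_pred = [p + dt * v, v]
    # P' = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = q * I (an assumption)
    p00 = P[0][0] + dt * (P[1][0] + P[0][1]) + dt * dt * P[1][1] + q
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1] + q
    return x_pred, [[p00, p01], [p10, p11]]

def update(x, P, z, r):
    """Correct the prediction with a position measurement z (H = [1, 0])."""
    y = z - x[0]                         # innovation
    s = P[0][0] + r                      # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
    x_new = [x[0] + k0 * y, x[1] + k1 * y]
    P_new = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x_new, P_new

def run_filter(measurements, dt=1.0, q=0.01, r=0.5):
    x, P = [0.0, 0.0], [[100.0, 0.0], [0.0, 100.0]]  # vague initial guess
    for z in measurements:
        x, P = predict(x, P, dt, q)   # predict from the previous estimate
        x, P = update(x, P, z, r)     # correct with the current measurement
    return x, P

# A target moving at 1 m/s, measured once per second
state, cov = run_filter([float(k) for k in range(1, 21)])
```

The filter never measures velocity directly, yet it converges to roughly 1 m/s because each update feeds the position error back into the velocity estimate. In a radar-LiDAR setup, the same update runs whenever either sensor reports, each with its own noise variance r.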

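The difficulty with radar-LiDAR fusion is that the LiDAR measurement model is linear, whereas the radar measurement model is nonlinear. LiDAR object detections are typically given as positions in Cartesian coordinates, which map linearly onto the filter’s state, while radar reports range, bearing, and range rate (polar coordinates), which relate nonlinearly to that state. Before fusing the radar data with the LiDAR data, therefore, the filter must handle this nonlinearity and then update the model accordingly.

An extended Kalman filter (EKF) does this by linearizing the radar measurement function around the current estimate. An unscented Kalman filter (UKF) instead propagates a set of sampled sigma points through the nonlinear function, avoiding explicit linearization.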
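For the EKF route, the key ingredient is the Jacobian of the radar measurement function. The sketch below assumes a state [px, py, vx, vy] in metres and metres per second and a radar that reports (range, bearing, range rate); these conventions are a common choice, not necessarily what a given vehicle uses.

```python
import math

def radar_h(state):
    """Nonlinear radar measurement function h(x) -> (range, bearing, range rate)."""
    px, py, vx, vy = state
    rho = math.hypot(px, py)
    if rho < 1e-6:
        raise ValueError("measurement undefined at the origin")
    return rho, math.atan2(py, px), (px * vx + py * vy) / rho

def radar_jacobian(state):
    """Jacobian of h(x) at the current estimate; the EKF uses it in place of H."""
    px, py, vx, vy = state
    rho2 = px * px + py * py
    if rho2 < 1e-9:
        raise ValueError("Jacobian undefined at the origin")
    rho = math.sqrt(rho2)
    rho3 = rho2 * rho
    return [
        [px / rho, py / rho, 0.0, 0.0],
        [-py / rho2, px / rho2, 0.0, 0.0],
        [py * (vx * py - vy * px) / rho3, px * (vy * px - vx * py) / rho3,
         px / rho, py / rho],
    ]

# Target 3 m ahead, 4 m to the side, moving at 1 m/s along x
z_pred = radar_h((3.0, 4.0, 1.0, 0.0))
H_j = radar_jacobian((3.0, 4.0, 1.0, 0.0))
```

The Jacobian must be recomputed at every radar update, because the linearization is only valid near the current estimate. In practice, the bearing residual is also wrapped to the range [-π, π] before the update.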

2. Fusion of Camera and LiDAR

Systems that fuse a camera and a LiDAR sensor can use either low-level fusion, which combines raw data, or high-level fusion, which combines detected objects and their positions. The two approaches produce somewhat different results. Low-level fusion relies on overlapping the data from both sensors and matching regions of interest (ROIs): the camera detects objects in front of the vehicle in its images, while the system projects the 3D point cloud obtained from the LiDAR sensor onto the 2D image plane.
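The projection step can be sketched with the standard pinhole camera model. This assumes the LiDAR points have already been transformed into the camera frame (x right, y down, z forward) using the extrinsic calibration, which is omitted here; the intrinsics fx, fy, cx, cy and the ROI box are hypothetical values.

```python
def project_points(points_cam, fx, fy, cx, cy):
    """Pinhole projection of LiDAR points already expressed in the camera frame.

    Points behind the camera (z <= 0) cannot appear in the image and are dropped.
    """
    pixels = []
    for x, y, z in points_cam:
        if z > 0:
            pixels.append((fx * x / z + cx, fy * y / z + cy))
    return pixels

def points_in_roi(pixels, box):
    """Count projected points inside a camera ROI box (u_min, v_min, u_max, v_max)."""
    u0, v0, u1, v1 = box
    return sum(1 for u, v in pixels if u0 <= u <= u1 and v0 <= v <= v1)

pixels = project_points([(1.0, 0.5, 10.0), (0.0, 0.0, -3.0), (8.0, 0.0, 10.0)],
                        fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
# pixels == [(740.0, 410.0), (1440.0, 360.0)]
hits = points_in_roi(pixels, (700, 380, 780, 440))  # 1
```

LiDAR points that land inside a camera detection box are evidence that both sensors are seeing the same object.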

Next, the two maps are superimposed to check for common regions. A region where they overlap indicates the same object identified by both sensors. In high-level fusion, each sensor’s data is processed first, and the 2D camera detections are converted into 3D object detections. These are then compared with the LiDAR sensor’s 3D detections, and objects are matched according to how much the two sensors’ detections overlap, measured as intersection over union (IoU).
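A simplified sketch of IoU matching, assuming axis-aligned boxes in a bird’s-eye view given as (x_min, y_min, x_max, y_max) and a match threshold of 0.5; real 3D detections are usually rotated boxes, which require a polygon or 3D overlap computation instead.

```python
def iou(a, b):
    """IoU of two axis-aligned boxes (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def match_by_iou(camera_boxes, lidar_boxes, threshold=0.5):
    """Greedy one-to-one matching: pair each camera box with its best unused LiDAR box."""
    matches, used = [], set()
    for i, cb in enumerate(camera_boxes):
        best_j, best_iou = None, threshold
        for j, lb in enumerate(lidar_boxes):
            score = iou(cb, lb)
            if j not in used and score >= best_iou:
                best_j, best_iou = j, score
        if best_j is not None:
            used.add(best_j)
            matches.append((i, best_j, best_iou))
    return matches

matches = match_by_iou([(0, 0, 4, 2), (10, 10, 12, 12)],
                       [(10.2, 10, 12.2, 12), (0.5, 0, 4.5, 2)])
```

Here camera box 0 pairs with LiDAR box 1 and camera box 1 with LiDAR box 0; detections below the threshold stay unmatched and can be handled as single-sensor objects.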

Development of Autonomous Vehicle Features at Dorleco

Among the many things we do at Dorleco is developing sensor and actuator drivers. We can help you with full-stack software development or custom feature development.

Please send an email to info@dorleco.com for more information about our services and how we can help you with your software control needs. You can also connect with us for all VCU-related products.