Introduction

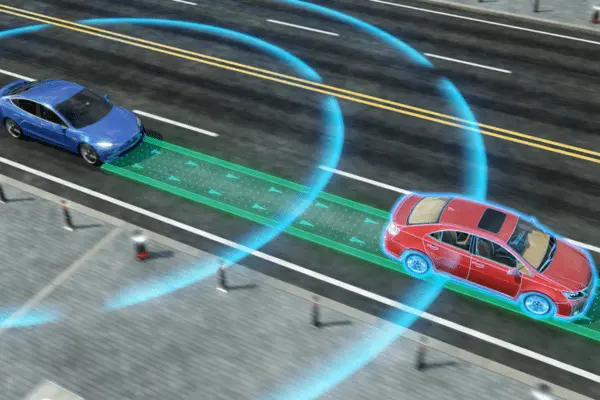

The entire discussion on sensors in autonomous vehicles comes down to one major question: will the vehicle’s brain (i.e., the computer) be able to make decisions the way a human brain does? Whether a computer can make these decisions is a separate topic. Still, it is just as important for an automotive company working on self-driving technology to provide the computer with the necessary and sufficient data to make those decisions.

This is where sensors, particularly their integration with the computing system, come into the picture.

Sensor Types in Self-Driving Cars:

Autonomous vehicles employ three primary types of sensors to map their surroundings: radio-based (radar), vision-based (cameras), and light- and laser-based (LiDAR).

The following is a quick explanation of the sensor types found in autonomous vehicles:

1. Cameras

A 360° view of the vehicle’s surroundings can be obtained by installing high-resolution video cameras at various points around the vehicle body. They capture images that provide the information needed to recognize objects on the road, such as people, traffic lights, and other cars.

The primary benefit of high-resolution camera data is the ability to identify objects precisely, and this ability is used to build 3D representations of the environment around the vehicle. However, in poor visibility, such as at night or in heavy rain or fog, these cameras are less precise.

Vision-based sensors in use:

Monocular vision sensor

Monocular vision sensors in autonomous vehicles use a single camera to help detect objects such as pedestrians and cars. Because this approach depends mostly on object classification, the system detects only objects it can classify. The algorithms can be trained to identify and categorize objects by simulating millions of kilometers of driving.

The system estimates distance by comparing an object’s apparent size with the sizes recorded in its memory. For example, suppose the system identifies an object as a truck. It can compare the size of the newly detected truck with the size of a previously recorded truck and estimate the distance from that comparison. On the other hand, an object that the system cannot classify goes undetected, which is a serious concern for developers of autonomous systems.
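The size comparison described above can be illustrated with the pinhole camera model, in which distance is proportional to real size divided by apparent size in the image. This is a minimal sketch, not a description of any production system: the function name, the truck height, and the focal length are all hypothetical values chosen for illustration.

```python
def distance_from_known_size(real_height_m: float,
                             pixel_height: float,
                             focal_length_px: float) -> float:
    """Estimate distance to a classified object from its apparent size.

    Pinhole model: distance = focal_length * real_height / pixel_height.
    """
    if pixel_height <= 0:
        raise ValueError("pixel_height must be positive")
    return focal_length_px * real_height_m / pixel_height


# Hypothetical reference data: a 3.5 m tall truck and a camera whose
# focal length is 1000 pixels. Neither value comes from the article.
TRUCK_HEIGHT_M = 3.5
FOCAL_LENGTH_PX = 1000.0

# The same truck appears smaller in the image when it is farther away.
near = distance_from_known_size(TRUCK_HEIGHT_M, 175.0, FOCAL_LENGTH_PX)
far = distance_from_known_size(TRUCK_HEIGHT_M, 70.0, FOCAL_LENGTH_PX)
```

The sketch also shows the limitation noted above: without a class label there is no reference height to plug in, so no distance can be computed.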

Stereo vision sensor

A stereo vision system uses a dual-camera arrangement, which helps estimate the distance to an object precisely even when the system cannot identify what the object is. Because it has two separate lenses, the system works like a pair of human eyes and can perceive depth. The two lenses record slightly different images, so the system can use triangulation to determine the distance between the object and the camera.
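For two parallel, calibrated cameras, the triangulation reduces to a simple relation: depth equals focal length times the distance between the lenses (the baseline), divided by the disparity, which is the horizontal shift of the object between the two images. The sketch below assumes that idealized setup; the function name and all numeric values are hypothetical.

```python
def stereo_depth(focal_length_px: float,
                 baseline_m: float,
                 x_left_px: float,
                 x_right_px: float) -> float:
    """Depth of a point seen by two parallel cameras.

    disparity = x_left - x_right (pixels); depth = f * B / disparity.
    """
    disparity = x_left_px - x_right_px
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity


# Hypothetical rig: 800 px focal length, lenses 0.5 m apart.
# The same point is found at column 420 (left image) and 400 (right image).
depth_m = stereo_depth(800.0, 0.5, 420.0, 400.0)
```

No object class is needed here, which is why a stereo system can range objects it cannot identify.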

Radar Sensors for Self-Driving Cars

Autonomous vehicles equipped with radar sensors use radio waves to scan their surroundings and determine the size, angle, and velocity of objects. A transmitter inside the sensor emits radio waves, and the sensor measures how long the waves take to be reflected back; from this it determines an object’s size, velocity, and distance from the host vehicle.

Radar has long been used in ocean navigation and weather forecasting because, unlike vision-based sensors, it performs very consistently across a wide range of weather conditions.
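The distance part of this measurement follows directly from the round-trip time: the wave travels to the object and back at the speed of light, so the range is half of speed times time. A minimal sketch, with a hypothetical function name and an illustrative timing value:

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0  # radio waves travel at the speed of light


def radar_range(round_trip_time_s: float) -> float:
    """Range to a reflector from the time between emission and echo."""
    if round_trip_time_s < 0:
        raise ValueError("round_trip_time_s must be non-negative")
    # Divide by two because the wave covers the distance twice.
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


# An echo received one microsecond after transmission (illustrative value).
range_m = radar_range(1e-6)
```

The example works out to roughly 150 m, which shows how short the time intervals are that a radar front end has to resolve.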

Radar sensors can be categorized by their operating range:

Short-Range Radar (SRR): 0.2 to 30 meters. The main benefit of SRR sensors is their high resolution. This is crucial because, at low resolution, it might not be possible to detect a pedestrian standing in front of a larger object.

Medium-Range Radar (MRR): 30 to 80 meters.

Long-Range Radar (LRR): 80 meters to more than 200 meters. These sensors work well with highway Automatic Emergency Braking (AEB) and Adaptive Cruise Control (ACC) systems.
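The range bands above can be captured in a small lookup table. In this sketch the names `RADAR_BANDS` and `radar_band` are hypothetical, the treatment of boundary values (30 m counted as MRR, 80 m as LRR) is an assumption, and the LRR upper bound is left open because the text only says "more than 200 meters".

```python
from typing import Optional

# (name, lower bound inclusive, upper bound exclusive), in meters.
RADAR_BANDS = [
    ("SRR", 0.2, 30.0),
    ("MRR", 30.0, 80.0),
    ("LRR", 80.0, float("inf")),
]


def radar_band(distance_m: float) -> Optional[str]:
    """Return the radar class whose range band covers the given distance."""
    for name, low, high in RADAR_BANDS:
        if low <= distance_m < high:
            return name
    return None  # closer than the shortest listed range


bands = [radar_band(d) for d in (5.0, 30.0, 150.0)]
```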

2. LiDAR sensors

LiDAR (Light Detection and Ranging) has certain advantages over radar and vision-based sensors. It sends out thousands of rapid laser pulses, and the reflections give a far more precise impression of the size, distance, and other characteristics of an object. The many pulses produce point clouds, which are collections of points in three dimensions, so a LiDAR sensor can also provide a three-dimensional image of an object.
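To make the idea of a point cloud concrete, the sketch below converts individual laser returns, each given as a range plus the beam’s horizontal and vertical angles, into 3D points. The axis convention (x forward, y left, z up), the function name, and the sample returns are assumptions for illustration; real sensors define their own coordinate frames and data formats.

```python
import math


def polar_to_xyz(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one laser return to a 3D point (x forward, y left, z up)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            range_m * math.sin(el))


# Three hypothetical returns: (range in m, azimuth in deg, elevation in deg).
returns = [(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (5.0, 0.0, 30.0)]
point_cloud = [polar_to_xyz(*r) for r in returns]
```

A real scan repeats this conversion for every pulse, and the resulting set of points is the three-dimensional image described above.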

LiDAR sensors frequently have excellent precision when detecting small objects, which enhances object identification accuracy. Furthermore, LiDAR sensors can be set up to provide a 360° view of the surroundings of the car, negating the need for several identical sensors.

The disadvantage is that LiDAR sensors have a complex architecture and design, so adding one to a car can multiply manufacturing costs. Furthermore, their high processing power requirements make it challenging to integrate them into a compact design. Most LiDAR sensors operate at 905 nm, which allows precise data collection within a limited field of view at ranges up to 200 m.

A few companies are also developing 1550 nm LiDAR sensors, which are expected to be even more accurate over a greater distance.

3. Ultrasonic Sensors

Ultrasonic sensors in autonomous vehicles are used mostly in low-speed driving scenarios. Most parking assist systems use them because they can accurately measure the distance between an object and the car, regardless of the object’s size or shape.

An ultrasonic sensor consists of a transmitter and a receiver. The transmitter sends ultrasonic sound waves, and the distance to the obstacle is computed from the time interval between the wave’s transmission and the reception of its echo.
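The computation is the same echo-timing idea, but with the speed of sound, which varies with air temperature. The sketch below uses a common linear approximation for the speed of sound in dry air; the function names, the default temperature, and the 5 ms echo time are assumptions chosen for illustration.

```python
def speed_of_sound(temp_c: float = 20.0) -> float:
    """Approximate speed of sound in dry air, in m/s, at temp_c degrees Celsius."""
    return 331.3 + 0.606 * temp_c


def echo_distance(echo_time_s: float, temp_c: float = 20.0) -> float:
    """Distance to an obstacle from the transmit-to-receive time interval."""
    if echo_time_s < 0:
        raise ValueError("echo_time_s must be non-negative")
    # The sound travels to the obstacle and back, hence the division by two.
    return speed_of_sound(temp_c) * echo_time_s / 2.0


# An echo arriving 5 ms after transmission, at the default 20 degrees Celsius.
parking_gap_m = echo_distance(0.005)
```

With these values the obstacle is a little under 0.9 m away, a typical parking distance. Ignoring temperature would shift the result by a few percent between a cold and a warm day.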

Ultrasonic sensors can measure distance precisely over a range of a few millimeters to five meters. They can also detect objects very close to the car, which is useful when parking.

Beyond these sensors, autonomous vehicles can also sense environmental conditions and exchange data through vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication. Sensor data from thousands of these networked cars provides reference data for various situations, conditions, and places, and can aid in the development of algorithms for autonomous vehicles.

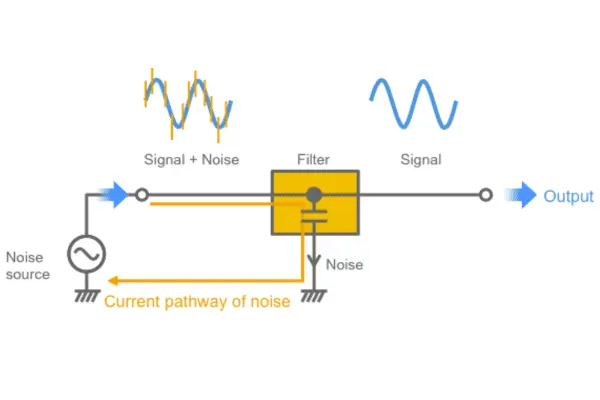

4. Filtering Out Noise

Unwanted noise or interference from the surroundings is the primary source of uncertainty when using individual sensors. Every piece of data a sensor picks up from the environment has two parts: the signal, which we need, and the noise, which we want to ignore.

The uncertainty comes from not knowing how much noise a given set of data contains. Generally speaking, high-frequency noise can significantly distort sensor data, and it must be removed to obtain the most precise signal possible. Noise filters fall into two categories: linear (such as the simple moving average) and non-linear (such as the median filter).
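The difference between the two categories shows up clearly on a signal with a single spike. The sketch below applies both filters over a sliding window of three samples; the helper name `sliding_filter` and the sample readings are hypothetical.

```python
from statistics import mean, median


def sliding_filter(samples, window, reducer):
    """Apply reducer (e.g. mean or median) to each full window of samples."""
    if window < 1 or window > len(samples):
        raise ValueError("window must be between 1 and len(samples)")
    return [reducer(samples[i:i + window])
            for i in range(len(samples) - window + 1)]


# Hypothetical distance readings with one noise spike in the middle.
readings = [10.0, 10.0, 10.0, 50.0, 10.0, 10.0, 10.0]

averaged = sliding_filter(readings, 3, mean)    # linear: spike is smeared out
medianed = sliding_filter(readings, 3, median)  # non-linear: spike is rejected
```

The moving average spreads the spike across three outputs, while the median removes it entirely. The median is a good fit for isolated outliers, and the average suits small, evenly spread noise.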

The most commonly used noise filters include:

A low-pass filter passes signals with frequencies below the cut-off frequency and attenuates signals with frequencies above it.

A high-pass filter passes signals with frequencies above the cut-off frequency and attenuates signals with frequencies below it.
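Both behaviors can be sketched with a first-order filter. The low-pass below is a discrete RC filter (exponential smoothing), and the high-pass is formed as its complement, the input minus the low-pass output. This is one simple construction among many; the function names, sample rate, and cut-off are assumptions for illustration.

```python
import math


def low_pass(samples, cutoff_hz, sample_rate_hz):
    """First-order low-pass: y[n] = y[n-1] + alpha * (x[n] - y[n-1])."""
    if not samples:
        return []
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)  # smoothing factor derived from the cut-off
    out = []
    y = samples[0]
    for x in samples:
        y += alpha * (x - y)
        out.append(y)
    return out


def high_pass(samples, cutoff_hz, sample_rate_hz):
    """Complementary high-pass: the input minus its low-pass component."""
    low = low_pass(samples, cutoff_hz, sample_rate_hz)
    return [x - l for x, l in zip(samples, low)]


# A constant level of 5.0 plus noise that flips sign on every sample.
noisy = [5.0 + (1.0 if i % 2 == 0 else -1.0) for i in range(200)]
smoothed = low_pass(noisy, cutoff_hz=2.0, sample_rate_hz=100.0)
residual = high_pass(noisy, cutoff_hz=2.0, sample_rate_hz=100.0)
```

After the initial transient, `smoothed` settles close to the slow 5.0 level, and `residual` keeps mostly the fast alternating component.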

The Kalman filter, recursive least squares (RLS), and least mean squares (LMS) are a few other popular filters.
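As an illustration of the first of these, here is a minimal one-dimensional Kalman filter that estimates a quantity assumed to be nearly constant, such as the distance to a parked obstacle. The function name, noise variances, initial values, and measurements are all hypothetical; a real vehicle would track a multi-dimensional state with a motion model.

```python
def kalman_1d(measurements, meas_var, process_var,
              init_estimate=0.0, init_var=1.0):
    """Scalar Kalman filter for a (nearly) constant state."""
    estimate, variance = init_estimate, init_var
    estimates = []
    for z in measurements:
        # Predict: the state is assumed unchanged, but uncertainty grows.
        variance += process_var
        # Update: blend prediction and measurement by their uncertainties.
        gain = variance / (variance + meas_var)
        estimate += gain * (z - estimate)
        variance *= (1.0 - gain)
        estimates.append(estimate)
    return estimates


# Hypothetical noisy range readings of an obstacle about 10 m away.
readings = [10.2, 9.7, 10.1, 9.9, 10.3, 9.8, 10.0, 10.1]
estimates = kalman_1d(readings, meas_var=0.04, process_var=1e-5,
                      init_estimate=0.0, init_var=1000.0)
```

The large initial variance tells the filter to trust the first measurement almost completely. After that, each new reading moves the estimate less as confidence builds.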

Development of Autonomous Vehicle Features at Dorle Controls

Our goal at Dorle Controls is to offer customized software development and integration services for self-driving cars. This covers both building specific applications based on user needs and building the full software stack for an autonomous car.

To learn more about our capabilities in this field, send an email to info@dorleco.com.